Cloud Security Due Diligence

It's easy to assume the public cloud is secure. Digging deeper into specific tools, using Azure as an example, is trickier. Proper due diligence is required.

In my role I encounter two types of customer: those who love all the new shiny things and are used to working in the cloud, and those who aren't. The latter often have their own data centre(s) and generally work on-premises. There are many reasons for this, but their primary concern is security. It's all very well trusting a cloud provider, but they need to be able to dig deeper and do their own due diligence. Where do they start?

For this I'll be looking at Microsoft Azure, but other cloud providers will have similar documentation.

You'll notice that I'm providing lots of links, and if you follow them there are more links that lead to more links. The documentation is very comprehensive, which is why I'm only scratching the surface. This is not an exhaustive list of resources; it's just a starting point.

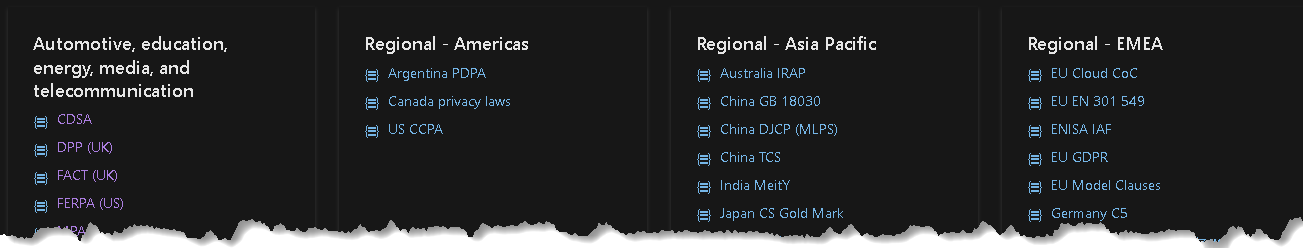

Azure Compliance Offerings

Firstly, it's worth finding out what assessments have been carried out on the data centres and what certifications they have achieved. Microsoft has an interesting site called Azure Compliance Offerings, which should be your first stop.

As well as legal and regulatory compliance information for individual nations, it has the same for certain industries.

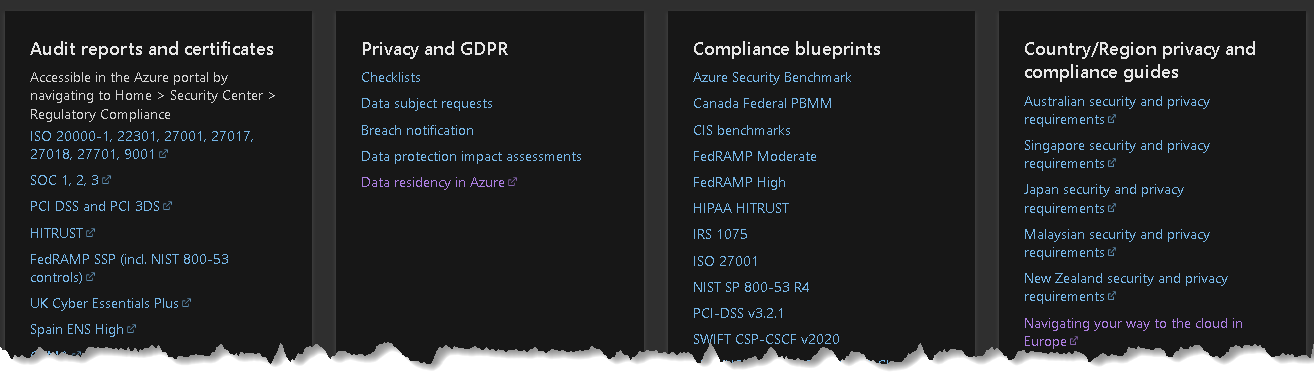

Scrolling down also shows a load of useful links to audit reports, certificates, privacy, GDPR and more.

Microsoft includes a caveat in the documentation, which says:

Customers are wholly responsible for ensuring their own compliance with all applicable laws and regulations. Information provided in Microsoft online documentation does not constitute legal advice, and customers should consult their legal advisors for any questions regarding regulatory compliance.

Who owns my data?

When I sign up with a new company, they always offer me options to receive marketing mail and to let them use my data to learn more about me. Some of the time this is OK, such as when I'm buying a hairdryer, but when I'm preparing to send lots of sensitive data into the cloud, I likely don't want my data to be used by anyone but me.

A useful place to start your research is the Microsoft Trust Centre, where the first commitments concern your data:

1. You control your data.

2. We are transparent about where data is located and how it is used.

3. We secure data at rest and in transit.

4. We defend your data.

We can dig deeper at the Privacy page, which covers things such as:

- Controlling your data

- Where your data is located (known as data residency)

- Securing your data

- Defending your data

Data Residency

Knowing where your data is located is of critical importance, not just for performance (e.g. network, redundancy and cost) but, most importantly, for security.

Before we go any further, you should understand an Azure Geography, which

contains one or more regions and meets specific data residency and compliance requirements

and Azure Regions which are

a set of data centres, deployed within a latency-defined perimeter and connected through a dedicated regional low-latency network.

Now that we know the basics, we need to visit Data Residency in Azure, which has brief details about regional and non-regional services. Choose your geography from the drop-down for more details, and pay particular attention to the more information section, which may have some small print about the tools you're researching.

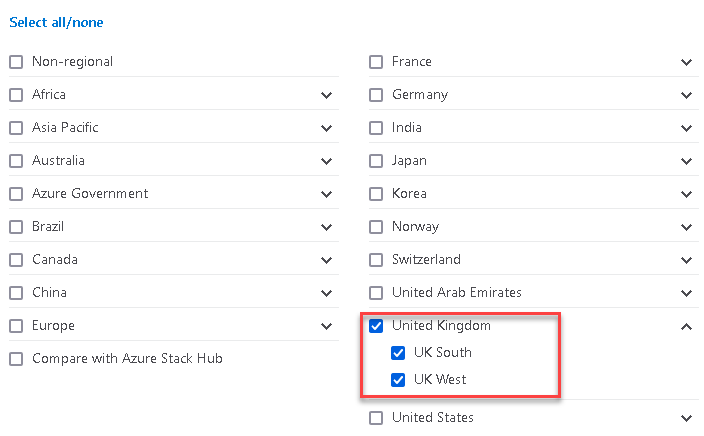

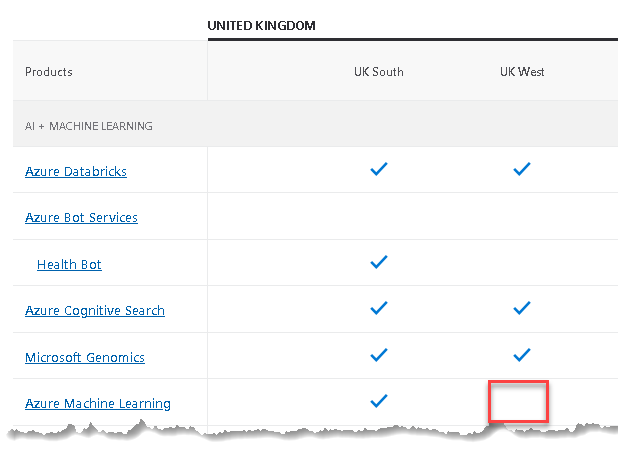

The next page to read is Azure Products by Region, where you can discover the tools available in your local region. It's important to use the filter to choose your regions of interest. I'm in the UK, so I want to choose the UK regions.

It's interesting to see which tools and other resources (such as Virtual Machines) are, or are not, available in each region. This is important for some tools when you consider high availability or global distribution, where data may be sent to other data centres. In this example, Azure Machine Learning is not available in the UK West region.
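
A check like this is easy to script once you have copied the availability information out of the Products by Region page. The sketch below is only an illustration: the `AVAILABILITY` table is hand-entered placeholder data (only the Azure Machine Learning gap in UK West comes from the example above), and `missing_services` is a hypothetical helper of mine, not part of any Azure SDK.

```python
# Hand-entered placeholder data: replace it with what the Products by Region
# page shows for your own regions on the day you check.
AVAILABILITY = {
    "UK South": {"Virtual Machines", "Azure Machine Learning"},
    "UK West": {"Virtual Machines"},  # no Azure Machine Learning, per the example above
}


def missing_services(required, regions, availability=AVAILABILITY):
    """Return {region: sorted list of required services not offered there}."""
    gaps = {}
    for region in regions:
        offered = availability.get(region, set())
        missing = sorted(set(required) - offered)
        if missing:
            gaps[region] = missing
    return gaps


required = ["Virtual Machines", "Azure Machine Learning"]
gaps = missing_services(required, ["UK South", "UK West"])
print(gaps)  # {'UK West': ['Azure Machine Learning']}
```

An empty result means every required service is offered in every region you listed, which is what you want before planning failover between them.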

Other Considerations

Now that we have a basic understanding, we need to dig deeper into the technical aspects of:

- Data in Transit

- Data at Rest (stored temporarily or permanently)

- Data Processing (when it's being used by a tool)

- Who has access to stored data (e.g. support engineers)

Data in Transit

Data in Transit means data on the move, whether it's between you and Azure, between Azure components such as VMs and databases, or between data centres. Data needs to be encrypted so a man in the middle can't read it. A comprehensive Azure introduction can be found here. You must also refer to any tool-specific documentation. For example, Azure Cognitive Services has its own security documentation that needs to be read in addition to the general Azure documentation.
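
On the client side you can also refuse to talk over anything weaker than you are comfortable with. As a minimal sketch, assuming a Python client and TLS 1.2 as the floor (that floor is my assumption, so check the minimum your chosen service supports), the standard library's `ssl` module can build a context that verifies certificates and rejects older protocol versions. `strict_tls_context` is a hypothetical helper name.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context that refuses old protocol versions."""
    # create_default_context() already turns on certificate verification
    # and hostname checking.
    ctx = ssl.create_default_context()
    # Assumed floor: reject anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = strict_tls_context()
```

The context can then be handed to whatever makes the connection, for example `urllib.request.urlopen(url, context=ctx)`.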

Data at Rest

Data at Rest is data that is not travelling but is stored in a database, on a disk or on some other medium. A good overview is here, which leads to a more detailed discussion of data encryption at rest.

The Encryption at Rest designs in Azure use symmetric encryption to encrypt and decrypt large amounts of data quickly according to a simple conceptual model:

- A symmetric encryption key is used to encrypt data as it is written to storage.

- The same encryption key is used to decrypt that data as it is readied for use in memory.

- Data may be partitioned, and different keys may be used for each partition.

- Keys must be stored in a secure location with identity-based access control and audit policies. Data encryption keys which are stored outside of secure locations are encrypted with a key encryption key kept in a secure location.
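
The conceptual model above can be sketched in a few lines. This is not how Azure implements it: the `toy_encrypt` / `toy_decrypt` functions are a deliberately simple stand-in cipher built from the standard library so the example runs anywhere (a real system would use an authenticated cipher such as AES-GCM), and the key encryption key here is just a local variable standing in for a key held in a secure location such as Key Vault. All names are hypothetical. What it does show is the key hierarchy: one data encryption key per partition, each stored only in wrapped (encrypted) form.

```python
import hashlib
import secrets


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 over key + nonce + counter. NOT for production."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def toy_encrypt(key: bytes, plaintext: bytes) -> bytes:
    """Symmetric stand-in cipher: random nonce followed by XOR-ed ciphertext."""
    nonce = secrets.token_bytes(16)
    stream = _keystream(key, nonce, len(plaintext))
    return nonce + bytes(a ^ b for a, b in zip(plaintext, stream))


def toy_decrypt(key: bytes, blob: bytes) -> bytes:
    """The same key that encrypted the data decrypts it."""
    nonce, ciphertext = blob[:16], blob[16:]
    stream = _keystream(key, nonce, len(ciphertext))
    return bytes(a ^ b for a, b in zip(ciphertext, stream))


def encrypt_partition(kek: bytes, data: bytes) -> dict:
    """Encrypt one partition with its own data encryption key (DEK)."""
    dek = secrets.token_bytes(32)
    return {
        # The DEK is stored next to the data, but only wrapped by the KEK.
        "wrapped_dek": toy_encrypt(kek, dek),
        "ciphertext": toy_encrypt(dek, data),
    }


def decrypt_partition(kek: bytes, record: dict) -> bytes:
    """Unwrap the DEK with the KEK, then decrypt the partition."""
    dek = toy_decrypt(kek, record["wrapped_dek"])
    return toy_decrypt(dek, record["ciphertext"])


# Stand-in for a key encryption key (KEK) kept in a secure location.
kek = secrets.token_bytes(32)

partitions = [b"customer records A-M", b"customer records N-Z"]
stored = [encrypt_partition(kek, p) for p in partitions]
recovered = [decrypt_partition(kek, r) for r in stored]
```

Because only the wrapped keys sit alongside the data, whoever controls access to the key encryption key controls access to everything beneath it, which is why the managed-key options discussed later matter.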

Data While Processing

When I say processing, I don't mean the kind where it's processed to sell you something, but when your chosen tool actually uses the data to do work. For example, the Computer Vision AI processes data to find people's faces. This is usually scenario-dependent because each tool uses a number of different resources. The case study below will illustrate this.

Case Study - Machine Learning

I've mentioned above that it's really important to research your chosen tooling thoroughly, because a tool may use multiple other tools. For this case study we'll look into Data Encryption in Machine Learning. This uses Blob Storage, Cosmos DB, Container Registry, Container Instance, Kubernetes Service, Databricks and Compute (which also contains a data disk and an OS disk). To be thorough, you would need to look at each individual resource's documentation to find out the specific details of its security features.

From the link above, there are some really useful insights that explain how the tool actually works:

Compute cluster: The OS disk for each compute node stored in Azure Storage is encrypted with Microsoft-managed keys in Azure Machine Learning storage accounts. This compute target is ephemeral, and clusters are typically scaled down when no runs are queued. The underlying virtual machine is de-provisioned, and the OS disk is deleted. Azure Disk Encryption isn't supported for the OS disk.

Each virtual machine also has a local temporary disk for OS operations. If you want, you can use the disk to stage training data. If the workspace was created with the hbi_workspace parameter set to TRUE, the temporary disk is encrypted. This environment is short-lived (only for the duration of your run,) and encryption support is limited to system-managed keys only.

Two points jump out: firstly, it mentions the hbi_workspace parameter, and secondly, encryption on the VM is limited to system-managed keys. Remember this is tool-specific, but let's look at what this means.

hbi_workspace parameter

A Workspace is a fundamental resource for machine learning in Azure Machine Learning. You use a workspace to experiment, train, and deploy machine learning models. Each workspace is tied to an Azure subscription and resource group, and has an associated SKU.

This quote is from here

Specifies whether the workspace contains data of High Business Impact (HBI), i.e., contains sensitive business information. This flag can be set only during workspace creation. Its value cannot be changed after the workspace is created. The default value is False.

When set to True, further encryption steps are performed, and depending on the SDK component, results in redacted information in internally-collected telemetry. For more information, see Data encryption.

When this flag is set to True, one possible impact is increased difficulty troubleshooting issues. This could happen because some telemetry isn't sent to Microsoft and there is less visibility into success rates or problem types, and therefore may not be able to react as proactively when this flag is True. The recommendation is use the default of False for this flag unless strictly required to be True.
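
Because the flag can only be set at creation time, it is worth making it explicit in whatever script creates the workspace. The sketch below assumes the v1 Python SDK (`azureml-core`), where `Workspace.create` accepts an `hbi_workspace` argument. The workspace name, resource group and region are placeholders, and `hbi_workspace_kwargs` and `create_hbi_workspace` are hypothetical helpers of mine. The SDK import is kept inside the function so the rest of the script runs without the package installed.

```python
def hbi_workspace_kwargs(name, subscription_id, resource_group, location):
    """Collect the arguments for creating a High Business Impact workspace."""
    return {
        "name": name,
        "subscription_id": subscription_id,
        "resource_group": resource_group,
        "location": location,
        # Cannot be changed later, so set it deliberately at creation time.
        "hbi_workspace": True,
    }


def create_hbi_workspace(**kwargs):
    """Create the workspace. Needs azureml-core and valid Azure credentials."""
    from azureml.core import Workspace  # assumed: v1 SDK is installed

    return Workspace.create(**kwargs)


# Placeholder values: substitute your own subscription, group and region.
kwargs = hbi_workspace_kwargs(
    name="example-workspace",
    subscription_id="<your-subscription-id>",
    resource_group="example-resource-group",
    location="uksouth",
)
# workspace = create_hbi_workspace(**kwargs)  # uncomment to really create it
```

Bear in mind the documented trade-off quoted above: with the flag on, less telemetry reaches Microsoft, so troubleshooting may be harder.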

Managed Keys & Bring Your Own Storage

Both the Data at Rest section and the Machine Learning case study mention "system managed keys". By default, data is encrypted with Microsoft-managed keys. There is also the option to manage your own keys in Key Vault, which is documented here. In some cases you can also choose to Bring Your Own Storage (BYOS), which is an interesting option to research.

With Bring Your Own Storage, these artifacts are uploaded into a storage account that you control. That means you control the encryption-at-rest policy, the lifetime management policy and network access. You will, however, be responsible for the costs associated with that storage account.

This quote is from here

Who has access to stored data?

When you think about what is going on behind the scenes, there is a lot of data being created about what your tool is doing - logs, resource metrics, errors, result-sets and more. If there's a problem, you may open a support ticket to get some help. Some customers may not want support to be able to see what's going on, perhaps because support engineers will be based in an unknown location. This is where Customer Lockbox is useful.

Most operations, support, and troubleshooting performed by Microsoft personnel and sub-processors do not require access to customer data. In those rare circumstances where such access is required, Customer Lockbox for Microsoft Azure provides an interface for customers to review and approve or reject customer data access requests. It is used in cases where a Microsoft engineer needs to access customer data, whether in response to a customer-initiated support ticket or a problem identified by Microsoft.

Again, there are tool-specific documents to read. For example, to use Customer Lockbox with Cognitive Services you need to request a particular SKU, which takes 3-5 business days to enable!

Summary

This has been a really quick tour of Azure security. I didn't fully understand the breadth and depth of the subject until I started to write this post. The most important thing to remember is that you need to research the specific tools you will be using (and potentially the tools they use too). They all have their own complexities and nuances.

Resources (yes more links!)

A variety of links to Azure Best Practices

Azure data security best practices